According to a new report from the Neustar International Security Council (NISC), over one-quarter of security alerts fielded within organizations are false positives. Surveying senior security professionals across five European countries and the U.S., the report highlights the need for more advanced and accurate security solutions to help alert-weary cybersecurity teams that are overwhelmed by massive alert volumes.

Alert Fatigue and Its Causes

Following are some of the key highlights from the report:

More than 41% of organizations experience over 10,000 alerts a day. That said, many of those alerts are not critical. Teams need to be able to quickly differentiate between low-fidelity alerts that clutter security analysts’ dashboards and those that pinpoint potentially malicious activity. This expanding volume of low-fidelity alerts has become a source of “noise” that consumes valuable time for everyone from developers to the security operations center (SOC). Thousands of hours can be wasted annually confirming whether an alert is legitimate or a false positive.

While security tools may trigger alert notifications, this doesn’t mean the activity is malicious. Security configuration errors, inaccuracies in legacy detection tools, and improperly applied security control algorithms can all contribute to false-positive rates. Other contributing factors include:

- Lack of context in the alert generation process.

- Inability to consolidate and classify alerts.

Another reason for the deluge of alerts is that many companies deploy multiple security controls that fail to correlate event data. Disparate events may not be linked, because the tools used by security analysts operate in separate silos with little consolidation. Log management and security information and event management (SIEM) systems can correlate events across separate products, yet they require significant customization to report events accurately.

Tools like these often require a security analyst to confirm the accuracy of each alert, namely whether it is a legitimate alert or a false positive. While these types of solutions can coordinate and aggregate data to analyze alerts, they don’t address the challenges posed by high rates of false positives.
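To make the idea of cross-tool correlation concrete, here is a minimal sketch that groups events from two siloed tools by the host they refer to. The event fields (`tool`, `host`, `detail`), the sample data, and the function names are all hypothetical and chosen only for illustration; a real SIEM would correlate on far richer data and rules.

```python
from collections import defaultdict

# Hypothetical events from two separate tools; field names are assumptions.
firewall_events = [
    {"tool": "firewall", "host": "10.0.0.5", "detail": "blocked outbound connection"},
    {"tool": "firewall", "host": "10.0.0.9", "detail": "port scan detected"},
]
endpoint_events = [
    {"tool": "endpoint", "host": "10.0.0.5", "detail": "unsigned binary executed"},
]


def correlate_by_host(*event_streams):
    """Group events from every stream under the host they refer to."""
    grouped = defaultdict(list)
    for stream in event_streams:
        for event in stream:
            grouped[event["host"]].append(event)
    return dict(grouped)


def hosts_seen_by_multiple_tools(grouped):
    """Return hosts reported by more than one tool, a simple correlation signal."""
    return sorted(
        host
        for host, events in grouped.items()
        if len({event["tool"] for event in events}) > 1
    )


grouped = correlate_by_host(firewall_events, endpoint_events)
print(hosts_seen_by_multiple_tools(grouped))
```

In this sketch, a host flagged by both tools surfaces as a single correlated item instead of two unrelated alerts on two dashboards. An analyst would still need to confirm whether the activity is malicious.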

Further complicating matters are intrusion detection and prevention systems (IDS/IPS) that cannot accurately aggregate multiple alerts. For instance, if an internal system attempts but fails to connect to an external IP address 50 times, most tools will generate 50 separate failed-connection alerts rather than recognizing the attempts as one repeated action.
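The aggregation that is missing in this example can be sketched in a few lines. The `Alert` record, its field names, and the sample addresses below are hypothetical; this is a simplified illustration of collapsing identical alerts into one entry with a count, not how any particular IDS/IPS product works.

```python
from collections import Counter
from typing import NamedTuple


class Alert(NamedTuple):
    """A simplified alert record; the field names are hypothetical."""
    source_host: str
    dest_ip: str
    event_type: str


def aggregate_alerts(alerts):
    """Collapse identical alerts into one summary entry with a count."""
    counts = Counter(alerts)
    return [
        {
            "source_host": alert.source_host,
            "dest_ip": alert.dest_ip,
            "event_type": alert.event_type,
            "count": count,
        }
        for alert, count in counts.items()
    ]


# 50 failed connection attempts from one internal system to one external IP.
raw_alerts = [Alert("10.0.0.5", "203.0.113.7", "failed_connection")] * 50
summary = aggregate_alerts(raw_alerts)
print(len(raw_alerts), "raw alerts ->", len(summary), "aggregated alert")
```

Keeping the count on the aggregated entry preserves the signal that the action was repeated, while the analyst sees one item to triage instead of 50.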

Security Alert Overload Introduces Risk and Inefficiencies

Investigating and validating a single alert can require a multitude of tools just to decide whether the alert should be escalated. According to a report by CRITICALSTART, incident responders spend an average of 2.5 to 5 hours each day investigating alerts.

Unable to cope with the endless stream of alerts, security teams are tuning specific alert features to reduce volume. But this often ratchets up risk, as they may elect to ignore certain categories of alerts and turn off high-volume alert features.

As a result, one of the challenges development teams face in managing alert fatigue in application security (AppSec) is finding the right balance between liberal controls that could flood systems with alerts and more stringent alert criteria that could leave teams exposed to false negatives.

While false positives may be annoying and burden teams with additional triage requests, false negatives tend to be more dangerous: the tested functionality of an application is erroneously flagged as “passing” when, in reality, it contains one or more vulnerabilities. For AppSec teams, the objective is to detect valid threats through quality alerts that are supported by the context and evidence needed to inspect them accurately and continuously.
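The trade-off between false positives and false negatives can be expressed with two standard measures: precision (the share of alerts that are real) and recall (the share of real issues that were alerted on). The sketch below uses purely illustrative counts that are assumptions, not figures from any report, and the function name `alert_quality` is hypothetical.

```python
def alert_quality(true_pos, false_pos, false_neg):
    """Return (precision, recall) for a set of alert outcomes.

    precision: fraction of raised alerts that were real issues.
    recall: fraction of real issues that raised an alert.
    """
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return precision, recall


# Illustrative counts only: 40 alerts raised, 10 of them false positives,
# and 10 real vulnerabilities that never produced an alert (false negatives).
precision, recall = alert_quality(true_pos=30, false_pos=10, false_neg=10)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Loosening alert criteria tends to raise recall at the cost of precision (more noise), while tightening them does the reverse (more missed vulnerabilities), which is the balance described above.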

Reducing Alert Fatigue With Instrumented AppSec

Fortunately, technologies like instrumentation help automate security testing to reduce false positives and false negatives.

Instrumentation is the ability to record and measure information within an application without changing the application itself. Current “flavors” of security instrumentation include the following technologies:

- Software Composition Analysis (SCA). SCA inventories and assesses all open-source libraries.

- Runtime Application Self-Protection (RASP). A RASP monitors threats and attacks while preventing vulnerabilities from being exploited.

- Interactive Application Security Testing (IAST). An IAST monitors applications for novel vulnerabilities in custom code and libraries.

By instrumenting an application with passive sensors, teams have more access to information about the application and its execution, delivering unprecedented levels of speed and accuracy in identifying vulnerabilities. This unique approach to modern AppSec produces the intelligence and evidence necessary to detect vulnerabilities with virtually no false positives and no false negatives.

At the end of the day, your security tools need to give you fewer, but more significant, alerts that contain the correct intelligence to best inform your security and development teams. With technologies that use instrumentation, like SCA, IAST, and RASP, you can achieve high accuracy thanks to visibility into an application and its runtime environment as code loads into memory, which also provides enhanced security logging for analytics.

Featured in DZone | June 1, 2020

![]()

Ransomware in 2025: The Real Risk, the Gaps That Persist, and What Actually Works

Ransomware attacks aren’t slowing down. They’re getting smarter, faster, and more expensive. In ...![]()

Security Operations Leaders: The Chaos Is Real

If you’re a CISO, SOC leader, or InfoSec pro, you’ve felt it. Alert volumes spike. Tools multipl...![]()

Transform Vulnerability Management: How Critical Start & Qualys Reduce Cyber Risk

In a recent webinar co-hosted by Qualys and Critical Start, experts from both organizations discusse...![]()

H2 2024 Cyber Threat Intelligence Report: Key Takeaways for Security Leaders

In a recent Critical Start webinar, cyber threat intelligence experts shared key findings from the H...![]()

Bridging the Cybersecurity Skills Gap with Critical Start’s MDR Expertise

During a recent webinar hosted by CyberEdge, Steven Rosenthal, Director of Product Management at Cri...![]()

2024: The Cybersecurity Year in Review

A CISO’s Perspective on the Evolving Threat Landscape and Strategic Response Introduction 2024 has...![]()

Modern MDR That Adapts to Your Needs: Tailored, Flexible Security for Today’s Threats

Every organization faces unique challenges in today’s dynamic threat landscape. Whether you’re m...![]()

Achieving Cyber Resilience with Integrated Threat Exposure Management

Welcome to the third and final installment of our three-part series Driving Cyber Resilience with Hu...Why Remote Containment and Active Response Are Non-Negotiables in MDR

You Don’t Have to Settle for MDR That Sucks Welcome to the second installment of our three-part bl...![]()

Choosing the Right MDR Solution: The Key to Peace of Mind and Operational Continuity

Imagine this: an attacker breaches your network, and while traditional defenses scramble to catch up...![]()

Redefining Cybersecurity Operations: How New Cyber Operations Risk & Response™ (CORR) platform Features Deliver Unmatched Efficiency and Risk Mitigation

The latest Cyber Operations Risk & Response™ (CORR) platform release introduces groundbreaking...![]()

The Rising Importance of Human Expertise in Cybersecurity

Welcome to Part 1 of our three-part series, Driving Cyber Resilience with Human-Driven MDR: Insights...![]()

Achieving True Protection with Complete Signal Coverage

Cybersecurity professionals know all too well that visibility into potential threats is no longer a ...![]()

Beyond Traditional MDR: Why Modern Organizations Need Advanced Threat Detection

You Don’t Have to Settle for MDR That Sucks Frustrated with the conventional security measures pro...The Power of Human-Driven Cybersecurity: Why Automation Alone Isn’t Enough

Cyber threats are increasingly sophisticated, and bad actors are attacking organizations with greate...Importance of SOC Signal Assurance in MDR Solutions

In the dynamic and increasingly complex field of cybersecurity, ensuring the efficiency and effectiv...The Hidden Risks: Unmonitored Assets and Their Impact on MDR Effectiveness

In the realm of cybersecurity, the effectiveness of Managed Detection and Response (MDR) services hi...![]()

The Need for Symbiotic Cybersecurity Strategies | Part 2: Integrating Proactive Security Intelligence into MDR

In Part 1 of this series, The Need for Symbiotic Cybersecurity Strategies, we explored the critical ...Finding the Right Candidate for Digital Forensics and Incident Response: What to Ask and Why During an Interview

So, you’re looking to add a digital forensics and incident response (DFIR) expert to your team. Gr...![]()

The Need for Symbiotic Cybersecurity Strategies | Part I

Since the 1980s, Detect and Respond cybersecurity solutions have evolved in response to emerging cyb...![]()

Critical Start H1 2024 Cyber Threat Intelligence Report

Critical Start is thrilled to announce the release of the Critical Start H1 2024 Cyber Threat Intell...![]()

Now Available! Critical Start Vulnerability Prioritization – Your Answer to Preemptive Cyber Defense.

Organizations understand that effective vulnerability management is critical to reducing their cyber...![]()

Recruiter phishing leads to more_eggs infection

With additional investigative and analytical contributions by Kevin Olson, Principal Security Analys...![]()

2024 Critical Start Cyber Risk Landscape Peer Report Now Available

We are excited to announce the release of the 2024 Critical Start Cyber Risk Landscape Peer Report, ...Critical Start Managed XDR Webinar — Increase Threat Protection, Reduce Risk, and Optimize Operational Costs

Did you miss our recent webinar, Stop Drowning in Logs: How Tailored Log Management and Premier Thre...Pulling the Unified Audit Log

During a Business Email Compromise (BEC) investigation, one of the most valuable logs is the Unified...![]()

Set Your Organization Up for Risk Reduction with the Critical Start Vulnerability Management Service

With cyber threats and vulnerabilities constantly evolving, it’s essential that organizations take...![]()

Announcing the Latest Cyber Threat Intelligence Report: Unveiling the New FakeBat Variant

Critical Start announces the release of its latest Cyber Threat Intelligence Report, focusing on a f...Cyber Risk Registers, Risk Dashboards, and Risk Lifecycle Management for Improved Risk Reduction

Just one of the daunting tasks Chief Information Security Officers (CISOs) face is identifying, trac...![]()

Beyond SIEM: Elevate Your Threat Protection with a Seamless User Experience

Unraveling Cybersecurity Challenges In our recent webinar, Beyond SIEM: Elevating Threat Prote...![]()

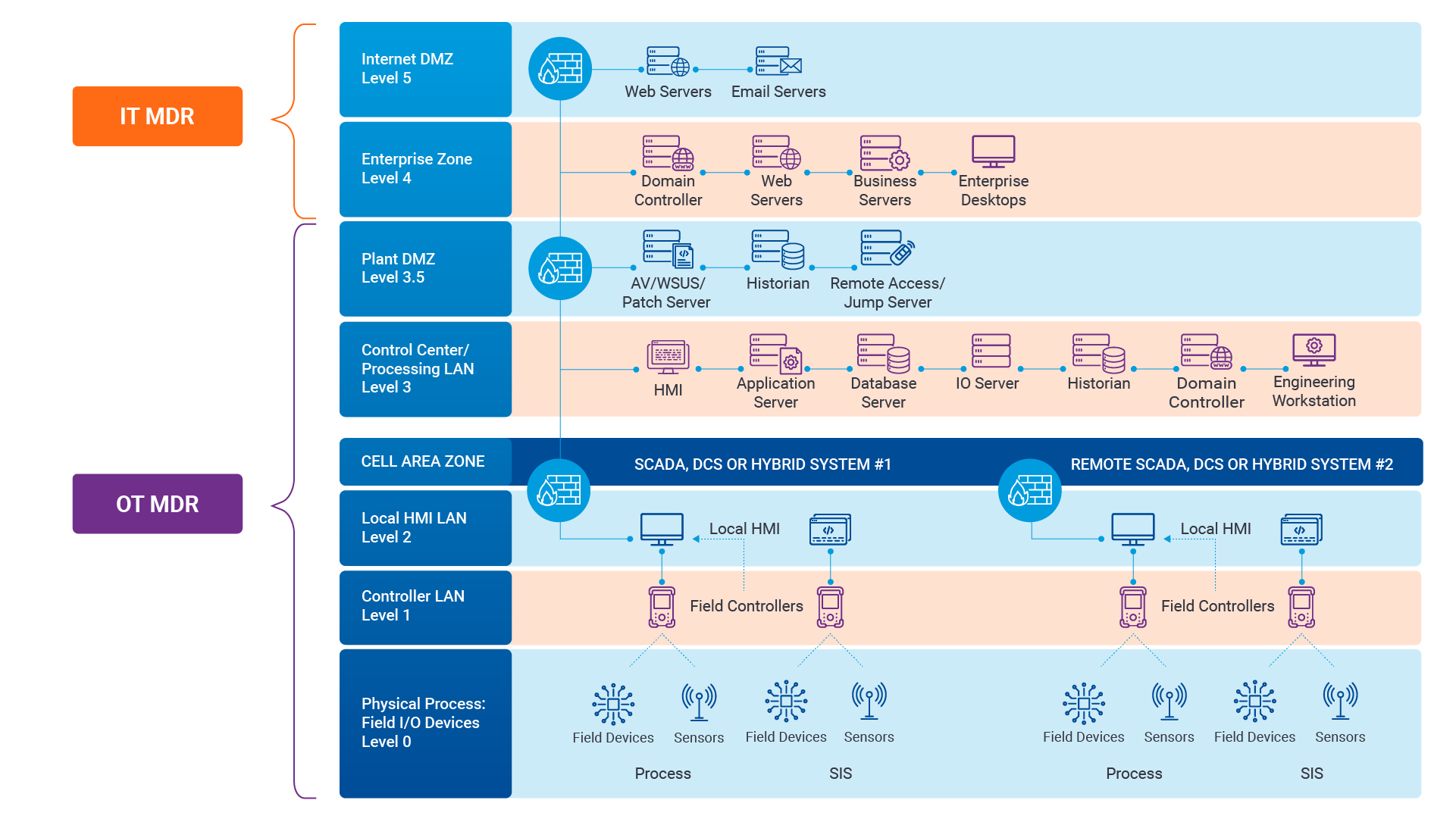

Navigating the Convergence of IT and OT Security to Monitor and Prevent Cyberattacks in Industrial Environments

The blog Mitigating Industry 4.0 Cyber Risks discussed how the continual digitization of the manufac...![]()

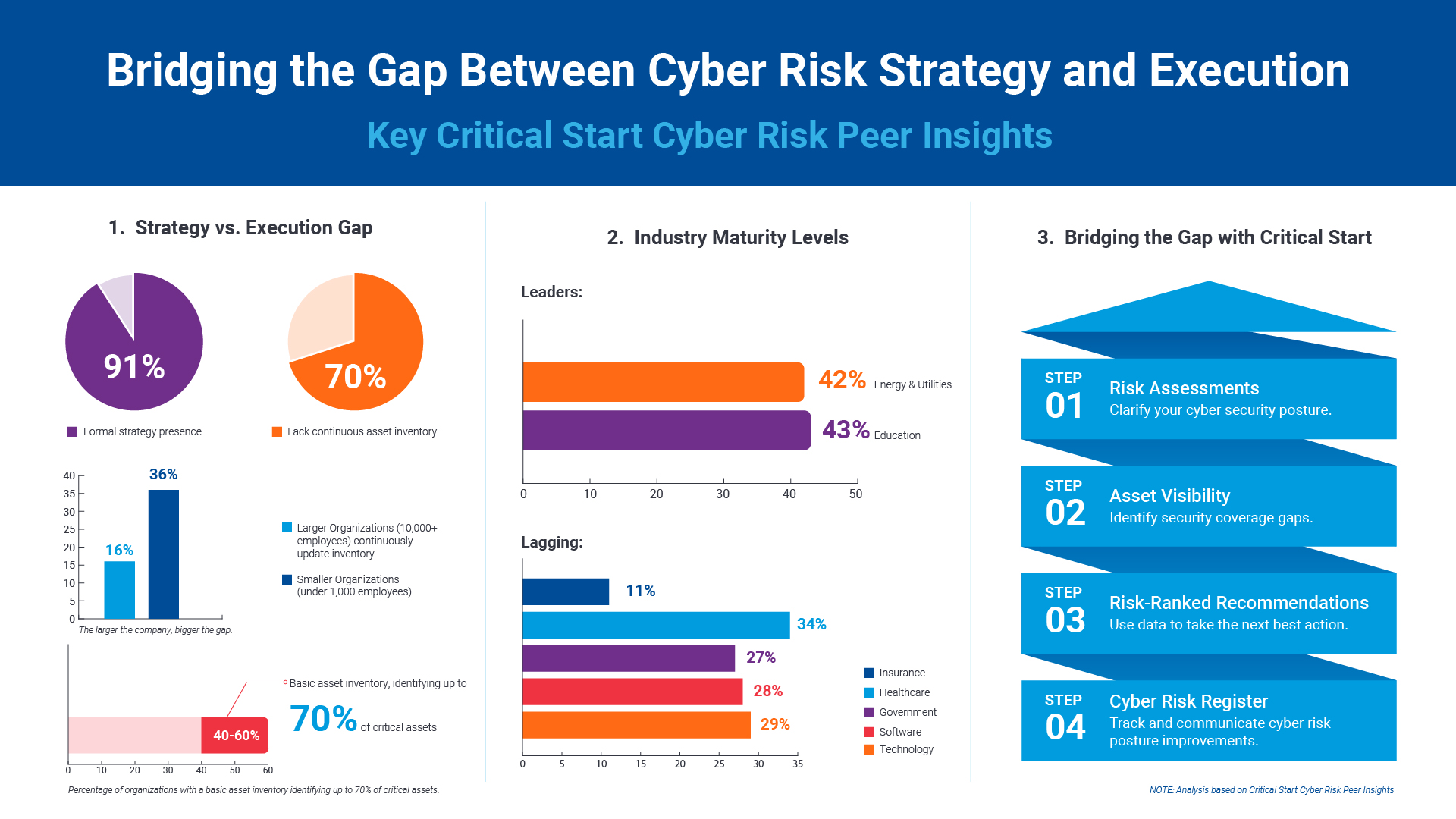

Critical Start Cyber Risk Peer Insights – Strategy vs. Execution

Effective cyber risk management is more crucial than ever for organizations across all industries. C...![]() Press Release

Press ReleaseCritical Start Named a Major Player in IDC MarketScape for Emerging Managed Detection and Response Services 2024

Critical Start is proud to be recognized as a Major Player in the IDC MarketScape: Worldwide Emergin...Introducing Free Quick Start Cyber Risk Assessments with Peer Benchmark Data

We asked industry leaders to name some of their biggest struggles around cyber risk, and they answer...Efficient Incident Response: Extracting and Analyzing Veeam .vbk Files for Forensic Analysis

Introduction Incident response requires a forensic analysis of available evidence from hosts and oth...![]()

Mitigating Industry 4.0 Cyber Risks

As the manufacturing industry progresses through the stages of the Fourth Industrial Revolution, fro...![]()

CISO Perspective with George Jones: Building a Resilient Vulnerability Management Program

In the evolving landscape of cybersecurity, the significance of vulnerability management cannot be o...![]()

Navigating the Cyber World: Understanding Risks, Vulnerabilities, and Threats

Cyber risks, cyber threats, and cyber vulnerabilities are closely related concepts, but each plays a...The Next Evolution in Cybersecurity — Combining Proactive and Reactive Controls for Superior Risk Management

Evolve Your Cybersecurity Program to a balanced approach that prioritizes both Reactive and Proactiv...![]()

CISO Perspective with George Jones: The Top 10 Metrics for Evaluating Asset Visibility Programs

Organizations face a multitude of threats ranging from sophisticated cyberattacks to regulatory comp...- eBook

Ditch the Black Box: Get Transparent MDR with Critical Start

Tired of MDR providers leaving you in the dark? We totally get it. Our eBook, Unmatched Transparency... - eBook

Why the Most Secure Organizations Choose Critical Start MDR

Frustrated with MDR providers that fall short? We totally get it. Critical Start delivers unmatched ... ![]()

Drowning in Alerts: How to Cut the Noise and Focus on Real Threats

92 percent of organizations say they’re overwhelmed by an endless sea of alerts. It’s not just...

Newsletter Signup

Stay up-to-date on the latest resources and news from CRITICALSTART.

Thanks for signing up!